Technical debt represents the corners you cut while developing a feature or a system. That happens no matter how hard you try because life is life, and there are delays, deadlines, budgets, and people, including developers (yes, that’s you and me).

The most crucial point is understanding that you cannot avoid technical debt altogether, so it’s better to embrace that fact and learn to live with it instead of fighting it. From that point forward, you can only try to limit the amount of technical debt you, or someone else, generate and make sure to refactor some of it each sprint (or whatever unit of time fits your projects, team, or process).

One way to limit the piling up of technical debt is to refactor the code often. So, factor the refactoring time into your time estimates. Another way is to improve collaboration between all the parties involved. Everyone must work toward the same goal if you want your projects to succeed.

You will sometimes cut the usage of best practices short due to external forces like people or time constraints. The key is coming back to that code as soon as possible to repay the technical debt, and automated tests are there to help you refactor that code and eliminate that debt elegantly. Depending on the size of your workplace, there will be more or fewer people between you and that decision.

Some of these things might be out of your control, so you may have to live with more technical debt than you had hoped. However, even when things are out of your control, nothing stops you from becoming a pioneer and working toward improving the enterprise’s culture. Don’t be afraid to become an agent of change and lead the charge.

Nevertheless, don’t let the technical debt pile up too high, or you may not be able to pay it back, and at some point, that’s where a project begins to break and fail. Don’t be mistaken; a project in production can be a failure. Delivering a product does not guarantee success, and I’m talking about the quality of the code here, not the amount of generated revenue (I’ll leave that to other people to evaluate).

Next, we look at different ways to write tests, requiring more or less knowledge of the inner workings of the code.

Testing techniques

Here we look at different ways to approach our tests. Should we know the code? Should we test user inputs and compare them against the system results? How do we identify a proper sample of values? Let’s start with white-box testing.

White-box testing

White-box testing is a software testing technique that uses knowledge of the internal structure of the software to design tests. We can use white-box testing to find defects in the software’s logic, data structures, and algorithms.

This type of testing is also known as clear-box testing, open-box testing, transparent-box testing, glass-box testing, and code-based testing.

Another benefit of white-box testing is that it can help optimize the code. By reviewing the code to write tests, developers can identify and fix inefficient code structures, which improves overall software performance. The developer can also improve the application design by finding architectural issues while testing the code.

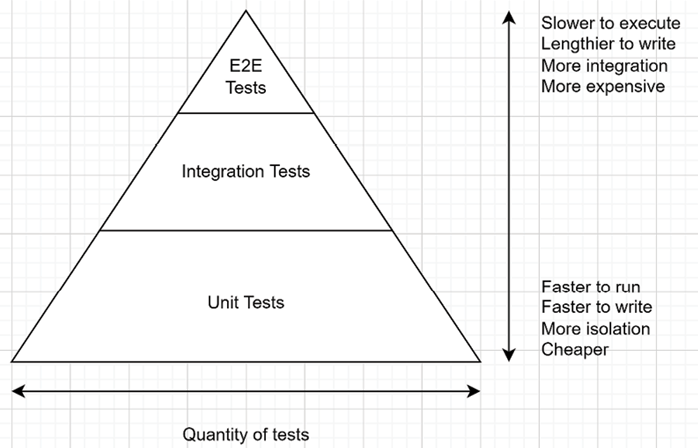

White-box testing encompasses most unit and integration tests.
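To make the idea more concrete, here is a minimal sketch of white-box unit tests, written in Python for brevity. The `shipping_cost` function, its pricing rules, and the test names are all hypothetical and exist only for illustration. The point is that each test is derived from reading the code: one test per branch, plus the boundary value of the weight threshold.

```python
def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical function under test; the rules are invented for this example."""
    if weight_kg <= 0:                      # branch 1: invalid input
        raise ValueError("weight must be positive")
    if weight_kg <= 2:                      # branch 2: flat rate up to 2 kg
        cost = 5.0
    else:                                   # branch 3: surcharge per extra kg
        cost = 5.0 + (weight_kg - 2) * 1.5
    if express:                             # branch 4: express doubles the cost
        cost *= 2
    return cost


# Each test below targets a branch we know exists because we read the code.
def test_rejects_non_positive_weight():
    try:
        shipping_cost(0, express=False)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a non-positive weight")


def test_flat_rate_at_threshold():
    # 2 kg is the boundary of the `weight_kg <= 2` condition.
    assert shipping_cost(2, express=False) == 5.0


def test_surcharge_above_threshold():
    # 5.0 + (4 - 2) * 1.5 = 8.0
    assert shipping_cost(4, express=False) == 8.0


def test_express_doubles_cost():
    assert shipping_cost(4, express=True) == 16.0
```

A test runner such as pytest would discover and run these functions by name. Notice that the tests depend on the structure of the implementation, so changing the threshold or the branching may require updating them.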

Next, we look at black-box testing, the opposite of white-box testing.