Black-box testing is a software testing method where a tester examines an application’s functionality without knowing its internal structure or implementation details. This form of testing focuses solely on the inputs and outputs of the system under test, treating the software as a “black box” that we can’t see into.

The main goal of black-box testing is to evaluate the system’s behavior against expected results derived from requirements or user stories. The people writing the tests do not need to know the codebase or the technology stack used to build the software.

We can use black-box testing to assess the correctness of several types of requirements, such as:

- Functional testing: This type of testing is related to the software’s functional requirements, emphasizing what the system does, a.k.a. behavior verification.

- Non-functional testing: This type of testing is related to non-functional requirements such as performance, usability, reliability, and security, a.k.a. quality evaluation.

- Regression testing: This type of testing ensures that new code does not break existing functionality, a.k.a. change impact.
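To make the idea concrete, here is a minimal sketch of black-box tests, using Python’s `unittest` purely for illustration. The `checkout_total` function, the `SAVE10` code, and the requirements the tests encode are all hypothetical. The implementation is inlined only so the example runs; the tests never look at how the total is computed, they only compare outputs against what the requirements say.

```python
import unittest
from decimal import Decimal


# Hypothetical system under test. In a real black-box setting this would be a
# deployed service or a module the tester cannot read; it is inlined here only
# so that the sketch runs on its own.
def checkout_total(prices, discount_code=None):
    subtotal = sum(prices, Decimal("0"))
    if discount_code == "SAVE10":
        subtotal = subtotal * Decimal("0.90")
    return subtotal.quantize(Decimal("0.01"))


# Black-box tests: each one is derived from a (hypothetical) requirement and
# checks only the output produced for a given input.
class CheckoutBlackBoxTests(unittest.TestCase):
    def test_total_is_sum_of_prices(self):
        # Functional requirement: the total is the sum of the item prices.
        total = checkout_total([Decimal("2.50"), Decimal("1.25")])
        self.assertEqual(total, Decimal("3.75"))

    def test_save10_takes_ten_percent_off(self):
        # Functional requirement: the SAVE10 code takes 10% off the subtotal.
        self.assertEqual(checkout_total([Decimal("20.00")], "SAVE10"), Decimal("18.00"))

    def test_unknown_code_is_ignored(self):
        # Regression test: pins behavior that already works so a later change
        # to the discount logic cannot silently break it.
        self.assertEqual(checkout_total([Decimal("20.00")], "BOGUS"), Decimal("20.00"))
```

Saved as, for example, `test_checkout.py`, the suite can be run with `python -m unittest test_checkout`. Swapping the inlined function for calls to a deployed API would not change the tests’ logic, which is the point of treating the system as a black box.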

Next, let’s explore a hybrid between white-box and black-box testing.

Grey-box testing

Grey-box testing is a blend of white-box and black-box testing. Testers need only partial knowledge of the application’s internal workings and use a combination of the software’s internal structure and external behavior to craft their tests.

We implement grey-box testing use cases in Chapter 16, Request-Endpoint-Response (REPR). Meanwhile, let’s compare the three techniques.
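As a rough illustration of that blend, the following sketch (Python and `unittest` chosen for illustration, not the Chapter 16 implementation) exercises a hypothetical application through its public entry point, as a black-box test would, while using knowledge of its internal in-memory store to seed data and verify side effects. The `App` class, its `handle` method, `InMemoryProductRepository`, and the `PUT /products/<id>` route are all invented for the example.

```python
import json
import unittest


class InMemoryProductRepository:
    """Hypothetical internal data store that a grey-box test knows about."""

    def __init__(self):
        self.products = {}


class App:
    """Hypothetical stand-in for a web app with a single 'rename product' endpoint."""

    def __init__(self, repository):
        self._repository = repository

    def handle(self, method, path, body=""):
        # Only one route exists: PUT /products/<id> with a JSON body {"name": ...}.
        parts = path.strip("/").split("/")
        if method != "PUT" or len(parts) != 2 or parts[0] != "products" or not parts[1].isdigit():
            return 404, {}
        product_id = int(parts[1])
        if product_id not in self._repository.products:
            return 404, {}
        name = json.loads(body)["name"]
        self._repository.products[product_id] = name
        return 200, {"id": product_id, "name": name}


class RenameProductGreyBoxTests(unittest.TestCase):
    def setUp(self):
        # Internal knowledge: seed the store directly instead of calling
        # some "create product" endpoint first.
        self.repository = InMemoryProductRepository()
        self.repository.products[1] = "Keyboard"
        self.app = App(self.repository)

    def test_rename_updates_response_and_store(self):
        # Black-box part: call the public entry point and check its output.
        status, payload = self.app.handle("PUT", "/products/1", '{"name": "Mouse"}')
        self.assertEqual(status, 200)
        self.assertEqual(payload, {"id": 1, "name": "Mouse"})
        # White-box part: verify the side effect inside the internal store.
        self.assertEqual(self.repository.products[1], "Mouse")

    def test_unknown_product_returns_404_and_leaves_store_unchanged(self):
        status, _ = self.app.handle("PUT", "/products/42", '{"name": "Mouse"}')
        self.assertEqual(status, 404)
        self.assertEqual(self.repository.products, {1: "Keyboard"})
```

Because one test covers routing, request parsing, the handler, and the persisted state, a handful of such tests can replace many narrower unit tests, at the cost of coupling them to the internal store.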

White-box vs. black-box vs. grey-box testing

The following table offers a concise comparison of the three broad techniques:

| Feature | Whitebox Testing | Blackbox Testing | Gray-box Testing |

| Definition | Testing based on the internal design of the software | Testing based on the behavior and functionality of the software | Testing that combines the internal design and behavior of the software |

| Knowledge of code required | Yes | No | Yes |

| Types of defects found | Logic, data structure, architecture, and performance issues | Functionality, usability, performance, and security issues | Most types of issues |

| Coverage per test | Small; targeted on a unit | Large; targeted on a use case | Up to large; can vary in scope |

| Testers | Usually performed by developers. | Testers can write the tests without specific technical knowledge of the application’s internal structure. | Developers can write the tests, while testers also can with some knowledge of the code. |

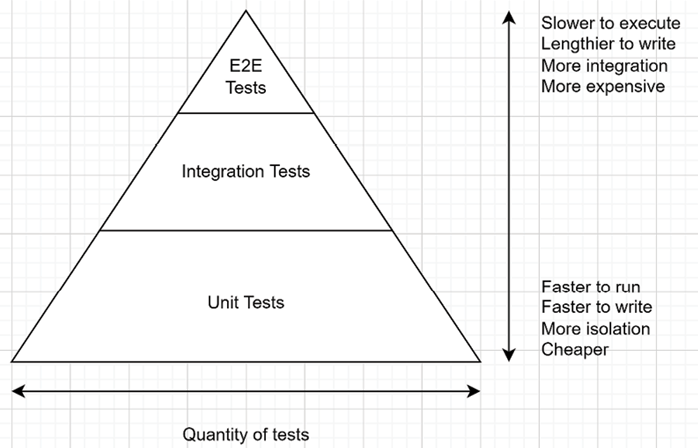

| When to use each style? | Write unit tests to validate complex algorithms or code that yields multiple results based on many inputs. These tests are usually high-speed so you can have many of them. | Write if you have specific scenarios you want to test, like UI tests, or if testers and developers are two distinct roles in your organization. These usually run the slowest and require you to deploy the application to test it. You want as few as possible to improve the feedback time. | Write to avoid writing black-box or white-box tests. Layer the tests to cover as much as possible with as few tests as possible. Depending on the application’s architecture, this type of test can yield optimal results for many scenarios. |
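To contrast with the black-box style, here is a minimal white-box sketch of the “complex algorithm with many inputs” case from the table, again in Python with `unittest` for illustration only. The `shipping_fee` function and its rates are hypothetical. The key difference is how the cases are chosen: by reading the code, so that every branch and each side of the internal thresholds (1 and 10 kg) gets exercised, rather than from a requirements document.

```python
import unittest


# Hypothetical function under test with several internal branches.
def shipping_fee(weight_kg: float) -> float:
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg < 1:          # branch A: flat rate for light parcels
        return 5.0
    if weight_kg < 10:         # branch B: base fee plus a per-kilogram rate
        return 5.0 + 2.0 * weight_kg
    return 30.0                # branch C: flat rate for heavy parcels


class ShippingFeeWhiteBoxTests(unittest.TestCase):
    # Cases picked from the code's structure: at least one value per branch,
    # plus values on both sides of each threshold.
    CASES = [
        (0.5, 5.0),    # branch A
        (1.0, 7.0),    # first value handled by branch B
        (4.0, 13.0),   # branch B
        (9.5, 24.0),   # last tested value before the threshold at 10
        (10.0, 30.0),  # first value handled by branch C
    ]

    def test_each_branch(self):
        for weight, expected in self.CASES:
            with self.subTest(weight=weight):
                self.assertAlmostEqual(shipping_fee(weight), expected)

    def test_invalid_weight_branch(self):
        with self.assertRaises(ValueError):
            shipping_fee(0)
```

These tests run in milliseconds, which is why the table suggests having many of them; `subTest` keeps the table-driven cases in one method while still reporting each failing input separately.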

To conclude, let’s explore a few advantages and disadvantages of each technique.